A Bus for Math: An SRE's First Look at GPU Architecture

As SREs, we spend most of our time thinking in terms of latency, throughput, and "how do we make this thing scale." Lately I've been going deeper on a piece of hardware that's been quietly rewriting the rules of throughput: the GPU. Not for rendering game frames, but for the kind of embarrassingly parallel workloads that are eating the modern infrastructure world.

This is what I've learned in my first week of going deep on GPU architecture, CUDA, and why it matters for engineers who care about performance at scale.

The CPU vs GPU Mindset

Here's the simplest way I can put it:

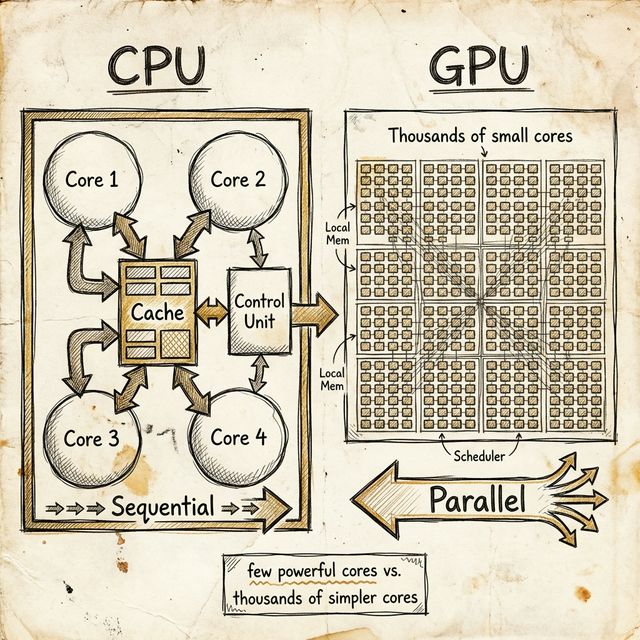

- CPU = a small team of brilliant professors. Each one handles complex, branching logic with ease. Anywhere from a handful to a few dozen powerful cores (more than a hundred on the largest server chips), big caches, sophisticated branch prediction.

- GPU = a massive lecture hall of students who can all do the same arithmetic problem at the exact same time. Thousands of simpler cores, with threads executing the same instruction in lockstep in groups of 32 called warps.

When you need to update 5 records in a database, the CPU is your tool. It's task-oriented, and it handles branching and conditional logic beautifully. But when you need to search through a terabyte of data for a string, process millions of pixels, or run an engineering simulation? That's where the GPU comes in. The throughput is the point.

GPUs aren't "faster" than CPUs. They're good at a fundamentally different kind of work: data-parallel problems where you do the same operation across a massive dataset.

Enter CUDA (2006)

CUDA stands for Compute Unified Device Architecture. NVIDIA introduced it in 2006 to make GPUs programmable beyond graphics.

Before CUDA, you had to trick the GPU into doing general computation by pretending your data was textures and polygons. Hacky, fragile, painful. CUDA changed the game: write in C, C++, Fortran, or Python and have it execute directly on the GPU.

CUDA is essentially NVIDIA's way of saying: "Here are thousands of cores. Here's a programming model to actually use them. Go."

Is CUDA Right for Your Workload?

Not every problem benefits from a GPU. Here's a simple way to think about it:

| Workload | Use GPU? |
|---|---|
| Update 5 records in a database | ❌ No (task-oriented, sequential) |
| Search through a TB of data | ✅ Yes (data-parallel, throughput-bound) |
| Engineering simulations | ✅ Yes (matrix math, parallelizable) |
| Train an ML model | ✅ Yes (tensor operations on massive datasets) |
| Serve a REST API | ❌ No (latency-sensitive, branching logic) |

The distinction comes down to serial vs parallel algorithms:

- Serial: executed sequentially, one step after another, like reading a book page by page.

- Parallel: break the work into pieces, execute simultaneously on many cores, then combine the results. Like having 1,000 people each read one page and then collating.
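To make the serial/parallel contrast concrete, here is a minimal CUDA sketch, assuming an NVIDIA GPU and the CUDA Toolkit's `nvcc` compiler. The names `sum_serial` and `sum_parallel` are my own, hypothetical helpers. The serial version is a plain loop on the CPU; the parallel version gives each GPU thread a slice of the array (the pieces) and then merges the partial sums with `atomicAdd` (the combine step). Error checking is left out to keep it short.

```cuda
// Hypothetical example names; assumes an NVIDIA GPU and nvcc. Error checks omitted.
#include <cassert>
#include <cstdio>
#include <vector>
#include <cuda_runtime.h>

// Serial: one core walks the array from start to finish, one element per step.
long long sum_serial(const std::vector<int>& v) {
    long long total = 0;
    for (int x : v) total += x;
    return total;
}

// Parallel: every thread sums its own strided slice of the array (the "pieces"),
// then adds its partial result into a single total (the "combine" step).
__global__ void sum_parallel(const int* data, int n, unsigned long long* total) {
    unsigned long long partial = 0;
    int stride = blockDim.x * gridDim.x;  // total number of threads in the grid
    for (int i = blockIdx.x * blockDim.x + threadIdx.x; i < n; i += stride) {
        partial += data[i];
    }
    atomicAdd(total, partial);  // combine: one atomic update per thread
}

int main() {
    const int n = 1 << 20;
    std::vector<int> host(n, 1);  // 2^20 ones, so both sums should equal n

    int* d_data = nullptr;
    unsigned long long* d_total = nullptr;
    cudaMalloc(&d_data, n * sizeof(int));
    cudaMalloc(&d_total, sizeof(unsigned long long));
    cudaMemcpy(d_data, host.data(), n * sizeof(int), cudaMemcpyHostToDevice);
    cudaMemset(d_total, 0, sizeof(unsigned long long));

    sum_parallel<<<256, 256>>>(d_data, n, d_total);  // 65,536 threads

    unsigned long long gpu_total = 0;
    cudaMemcpy(&gpu_total, d_total, sizeof(gpu_total), cudaMemcpyDeviceToHost);
    long long cpu_total = sum_serial(host);
    printf("serial=%lld parallel=%llu\n", cpu_total, gpu_total);
    assert(cpu_total == (long long)gpu_total);

    cudaFree(d_data);
    cudaFree(d_total);
    return 0;
}
```

Build with `nvcc sum.cu -o sum` and both totals should match. One atomic per thread keeps the sketch readable; tuned reductions usually combine results within each block first, using shared memory.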

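CUDA enables you to write programs that leverage the GPU's massive parallelism. You write in familiar languages (C, C++, Python), and the toolchain (`nvcc`, in the C/C++ case) compiles your code down to PTX, NVIDIA's intermediate instruction set, and from there to the GPU's native machine code. You never have to write GPU assembly yourself.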

How CUDA Organizes Work

This is where it gets interesting. CUDA introduces a hierarchy that maps software concepts to the physical GPU hardware.

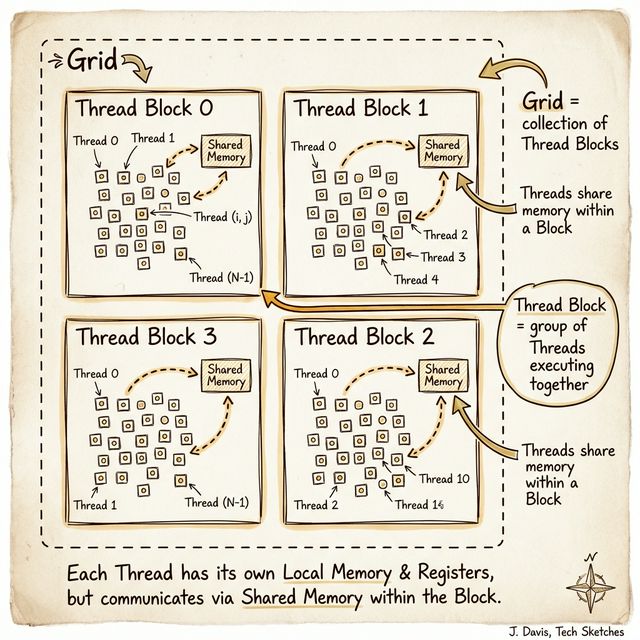

Threads → Thread Blocks → Grids

- Thread: the smallest unit of execution. Each thread runs a copy of the same function, called a kernel.

- Thread Block: a group of threads that execute together on a single SM. Threads within a block can cooperate through shared memory and can synchronize with each other. You, the programmer, choose the block size when you launch the kernel (up to 1,024 threads per block on current GPUs).

- Grid: a collection of thread blocks. When you launch a kernel, you're launching an entire grid's worth of thread blocks.
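The hierarchy shows up directly in code: every thread can read its own `threadIdx`, its block's `blockIdx`, and the block size `blockDim`, and combining them gives each thread a unique position in the grid. A minimal sketch, assuming an NVIDIA GPU and `nvcc`; `global_index` and `whoami` are hypothetical names:

```cuda
// Hypothetical example names; assumes an NVIDIA GPU and nvcc.
#include <cassert>
#include <cstdio>
#include <cuda_runtime.h>

// A thread's position in the whole grid: skip over all earlier blocks,
// then add the thread's position inside its own block.
__host__ __device__ int global_index(int block_idx, int block_dim, int thread_idx) {
    return block_idx * block_dim + thread_idx;
}

__global__ void whoami() {
    int gid = global_index(blockIdx.x, blockDim.x, threadIdx.x);
    printf("block %d, thread %d -> global thread %d\n",
           (int)blockIdx.x, (int)threadIdx.x, gid);
}

int main() {
    // The last thread of the second 4-thread block is global thread 7.
    assert(global_index(1, 4, 3) == 7);

    // A grid of 2 thread blocks, each with 4 threads: 8 threads total.
    whoami<<<2, 4>>>();
    cudaDeviceSynchronize();  // wait for the kernel so its printf output is flushed
    return 0;
}
```

Expect eight lines covering global threads 0 through 7, but not necessarily in order: blocks are scheduled independently, so the print order can vary between runs.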

Think of it like a massive warehouse operation. Each thread is a worker. Each thread block is a team of workers at the same station who can talk to each other and share tools. The grid is the entire warehouse floor, with all the stations running in parallel.
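Here is what "talking to each other and sharing tools" looks like in practice. This sketch, assuming an NVIDIA GPU and `nvcc` (with hypothetical names `reverse_in_block` and `check_reversed`), reverses a small array inside a single thread block: each thread drops one element into `__shared__` memory, `__syncthreads()` makes everyone wait until the whole team has finished writing, and then each thread picks up an element written by a different thread.

```cuda
// Hypothetical example names; assumes an NVIDIA GPU and nvcc. Error checks omitted.
#include <cassert>
#include <cstdio>
#include <cuda_runtime.h>

constexpr int kBlockSize = 64;  // one block handles the whole (small) array; n <= kBlockSize

// Each thread copies one element into the block's shared memory (the team's
// shared workbench), waits for its teammates, then reads a different thread's element.
__global__ void reverse_in_block(int* data, int n) {
    __shared__ int tile[kBlockSize];  // visible to every thread in this block only
    int t = threadIdx.x;
    if (t < n) tile[t] = data[t];
    __syncthreads();  // barrier: no thread reads until all threads have written
    if (t < n) data[t] = tile[n - 1 - t];
}

// Host-side check: after reversing 0..n-1, out[i] should equal n - 1 - i.
bool check_reversed(const int* out, int n) {
    for (int i = 0; i < n; ++i)
        if (out[i] != n - 1 - i) return false;
    return true;
}

int main() {
    const int n = kBlockSize;
    int host[n];
    for (int i = 0; i < n; ++i) host[i] = i;

    int* d = nullptr;
    cudaMalloc(&d, n * sizeof(int));
    cudaMemcpy(d, host, n * sizeof(int), cudaMemcpyHostToDevice);
    reverse_in_block<<<1, kBlockSize>>>(d, n);
    cudaMemcpy(host, d, n * sizeof(int), cudaMemcpyDeviceToHost);
    cudaFree(d);

    assert(check_reversed(host, n));
    printf("first=%d last=%d\n", host[0], host[n - 1]);
    return 0;
}
```

Without the `__syncthreads()` barrier, a thread could read a slot its teammate hasn't written yet. Threads in different blocks can't share memory this way, which is exactly the team boundary in the warehouse analogy.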

The Kernel

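A kernel is a function that runs on the GPU. Every thread in the grid executes the same kernel, but operates on different data. This is a close cousin of the classic SIMD (Single Instruction, Multiple Data) pattern; NVIDIA calls its version SIMT (Single Instruction, Multiple Threads), and it's the core of GPU parallelism.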
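The classic first kernel is vector addition. In this minimal sketch (assuming an NVIDIA GPU and `nvcc`; `vector_add` and `all_equal` are hypothetical names), every thread runs the same `vector_add` function, and the only thing that differs between threads is the index `i` each one computes from its block and thread coordinates. Unified memory via `cudaMallocManaged` keeps the host code short; error checking is omitted.

```cuda
// Hypothetical example names; assumes an NVIDIA GPU and nvcc. Error checks omitted.
#include <cassert>
#include <cstdio>
#include <cuda_runtime.h>

// One kernel, many threads: every thread runs this same function, and the only
// thing that differs is which element i it works on.
__global__ void vector_add(const float* a, const float* b, float* c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) c[i] = a[i] + b[i];  // guard: the last block may have spare threads
}

// Host-side check that every element of c equals the expected value.
bool all_equal(const float* c, int n, float expected) {
    for (int i = 0; i < n; ++i)
        if (c[i] != expected) return false;
    return true;
}

int main() {
    const int n = 1 << 20;
    float *a, *b, *c;
    // Managed memory is reachable from both CPU and GPU, which keeps the sketch short.
    cudaMallocManaged(&a, n * sizeof(float));
    cudaMallocManaged(&b, n * sizeof(float));
    cudaMallocManaged(&c, n * sizeof(float));
    for (int i = 0; i < n; ++i) { a[i] = 1.0f; b[i] = 2.0f; }

    const int threads = 256;
    const int blocks = (n + threads - 1) / threads;
    vector_add<<<blocks, threads>>>(a, b, c, n);
    cudaDeviceSynchronize();  // wait for the GPU before the CPU reads c

    assert(all_equal(c, n, 3.0f));
    printf("c[0]=%.1f c[n-1]=%.1f\n", c[0], c[n - 1]);
    cudaFree(a);
    cudaFree(b);
    cudaFree(c);
    return 0;
}
```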

When you launch a CUDA kernel, you specify two things: how many thread blocks you want, and how many threads per block. The GPU's hardware scheduler takes it from there, distributing thread blocks across the available streaming multiprocessors.
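The launch itself uses CUDA's `<<<blocks, threads>>>` syntax. This sketch, assuming an NVIDIA GPU and `nvcc` (with `blocks_for` and `scale` as hypothetical names), rounds the block count up so the grid covers every element, then shows that an oversized block is rejected at launch time, which `cudaGetLastError()` reports.

```cuda
// Hypothetical example names; assumes an NVIDIA GPU and nvcc.
#include <cassert>
#include <cstdio>
#include <cuda_runtime.h>

// Ceiling division: enough blocks of `threads_per_block` to cover n elements.
int blocks_for(int n, int threads_per_block) {
    return (n + threads_per_block - 1) / threads_per_block;
}

__global__ void scale(float* x, float factor, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) x[i] *= factor;
}

int main() {
    const int n = 1000;
    float* x = nullptr;
    cudaMalloc(&x, n * sizeof(float));
    cudaMemset(x, 0, n * sizeof(float));

    // The two numbers you choose at launch: <<<blocks in the grid, threads per block>>>.
    const int threads = 256;
    const int blocks = blocks_for(n, threads);  // 4 blocks = 1,024 threads for 1,000 elements
    assert(blocks * threads >= n);              // the grid must cover every element
    scale<<<blocks, threads>>>(x, 2.0f, n);
    cudaError_t err = cudaGetLastError();  // reports invalid launch configurations
    printf("launch <<<%d, %d>>>: %s\n", blocks, threads, cudaGetErrorString(err));

    // Blocks are capped at 1,024 threads on current GPUs, so this launch is rejected.
    scale<<<1, 2048>>>(x, 2.0f, n);
    err = cudaGetLastError();
    printf("launch <<<1, 2048>>>: %s\n", cudaGetErrorString(err));

    cudaDeviceSynchronize();
    cudaFree(x);
    return 0;
}
```

On a working GPU, the first launch should report success and the second an invalid-configuration error. Extra threads in the last block are harmless because the kernel's `i < n` guard skips them.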

The Streaming Multiprocessor: Heart of the GPU

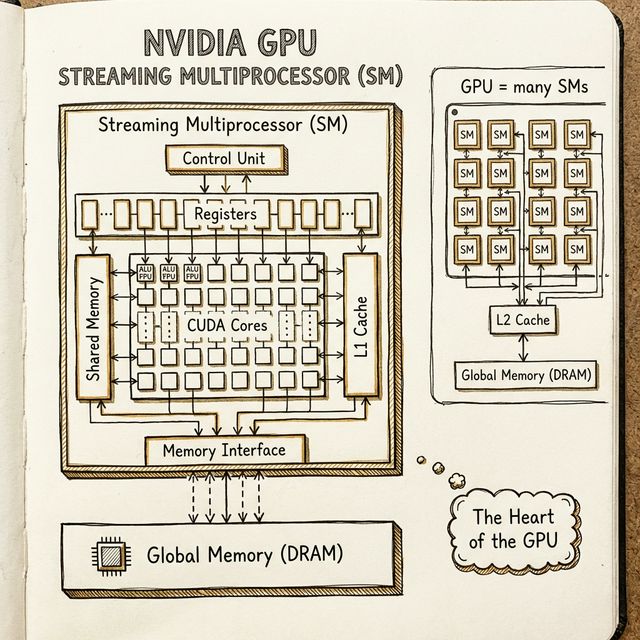

If the thread hierarchy is how you organize your software, the Streaming Multiprocessor (SM) is where that software actually runs.

Each SM is a self-contained processing unit with:

- Its own Control Unit: fetches and decodes instructions

- Registers: per-thread private memory, extremely fast

- CUDA Cores: the actual ALUs that do the math

- Shared Memory: fast, on-chip memory shared between threads in a block

- L1 Cache: local cache for speeding up memory access
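You can ask the CUDA runtime what your GPU's SMs look like. This is a minimal sketch, assuming an NVIDIA GPU and `nvcc`; `cudaGetDeviceProperties` and the `cudaDeviceProp` fields it reads are real runtime APIs, while `to_kib` is a hypothetical helper of mine. The runtime doesn't report the number of CUDA cores per SM directly; that figure comes from NVIDIA's per-architecture documentation.

```cuda
// Hypothetical helper name (to_kib); assumes an NVIDIA GPU and nvcc.
#include <cassert>
#include <cstddef>
#include <cstdio>
#include <cuda_runtime.h>

// Convert a byte count to KiB for friendlier printing.
size_t to_kib(size_t bytes) { return bytes / 1024; }

int main() {
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, 0) != cudaSuccess) {
        printf("no CUDA device found\n");
        return 1;
    }
    assert(prop.multiProcessorCount > 0);

    printf("GPU:                       %s\n", prop.name);
    printf("Streaming multiprocessors: %d\n", prop.multiProcessorCount);
    printf("Warp size:                 %d threads\n", prop.warpSize);
    printf("Max threads per SM:        %d\n", prop.maxThreadsPerMultiProcessor);
    printf("Max threads per block:     %d\n", prop.maxThreadsPerBlock);
    printf("32-bit registers per SM:   %d\n", prop.regsPerMultiprocessor);
    printf("Shared memory per SM:      %zu KiB\n", to_kib(prop.sharedMemPerMultiprocessor));
    printf("Shared memory per block:   %zu KiB\n", to_kib(prop.sharedMemPerBlock));
    printf("L2 cache (whole GPU):      %zu KiB\n", to_kib((size_t)prop.l2CacheSize));
    return 0;
}
```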

The SM is the heart of the GPU architecture. When you launch a kernel, the GPU's scheduler assigns entire thread blocks to available SMs. Each SM can run multiple thread blocks concurrently. How many blocks run at once depends on the number of SMs on your GPU combined with how many blocks fit on each SM, which is limited by each block's thread count, register usage, and shared memory usage.

The number of SMs varies by GPU model. An entry-level GeForce card might have a couple of dozen or fewer, a flagship GeForce RTX 4090 has 128, and a data center H100 (SXM) has 132.
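Here's one way to estimate "how many blocks can run at once" for a real kernel. This sketch, assuming an NVIDIA GPU and `nvcc`, uses the runtime's `cudaOccupancyMaxActiveBlocksPerMultiprocessor` to ask how many blocks of a kernel fit on one SM, multiplies by the SM count, and then estimates how many rounds ("waves") a large grid needs. The `waves` helper and the wave model itself are simplifications of mine: the hardware scheduler starts new blocks as soon as earlier ones finish rather than in strict rounds.

```cuda
// Hypothetical helper name (waves); assumes an NVIDIA GPU and nvcc.
#include <cassert>
#include <cstdio>
#include <cuda_runtime.h>

__global__ void vector_add(const float* a, const float* b, float* c, int n) {
    int i = blockIdx.x * blockDim.x + threadIdx.x;
    if (i < n) c[i] = a[i] + b[i];
}

// Ceiling division: roughly how many rounds the scheduler needs when only
// `resident` blocks fit on the whole GPU at the same time (simplified model).
int waves(int grid_blocks, int resident) {
    return (grid_blocks + resident - 1) / resident;
}

int main() {
    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, 0) != cudaSuccess) return 1;

    const int threads = 256;
    int blocks_per_sm = 0;
    // How many blocks of this kernel, at this block size, fit on one SM given its
    // register, shared-memory, and thread limits.
    cudaOccupancyMaxActiveBlocksPerMultiprocessor(&blocks_per_sm, vector_add, threads, 0);

    int resident = blocks_per_sm * prop.multiProcessorCount;
    assert(resident > 0);
    int grid = (1 << 24) / threads;  // 2^24 elements -> 65,536 blocks
    printf("%d SMs x %d blocks/SM = %d blocks resident at once\n",
           prop.multiProcessorCount, blocks_per_sm, resident);
    printf("a grid of %d blocks needs roughly %d waves\n", grid, waves(grid, resident));
    return 0;
}
```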

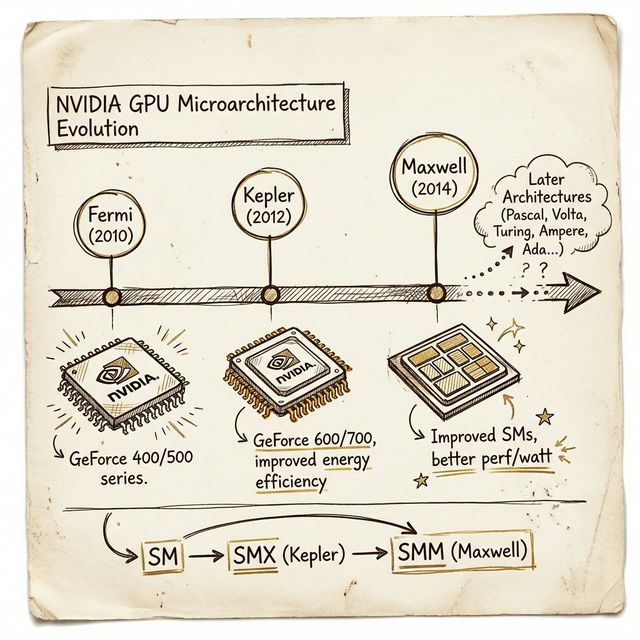

Across NVIDIA's architecture generations, the SM has been renamed to reflect internal redesigns:

- Fermi: just SM

- Kepler: SMX (extended)

- Maxwell: SMM (Maxwell)

The names change, but the concept stays the same: the SM is the fundamental building block of every NVIDIA GPU. From Pascal onward, NVIDIA went back to calling it simply the SM.

NVIDIA Microarchitecture Evolution

GPU architecture isn't static. NVIDIA's microarchitecture is the specific implementation of their instruction set, and each generation improves on the previous one.

Architecture vs Microarchitecture

These two terms get confused a lot, so let me clarify:

- Architecture (ISA) = the interface. What registers, data types, and instruction types are available.

- Microarchitecture = the implementation. How those instructions are physically executed: pipelining, datapath design, branch prediction.

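Think of it this way: the architecture is the API, and the microarchitecture is the implementation behind it. Same interface, different (and hopefully better) internals with each generation. NVIDIA adds a twist: the stable "API" your compiled code targets is PTX, a virtual instruction set, while each generation's native machine instruction set (SASS) can change. `nvcc` or the driver translates PTX into whatever the GPU actually runs.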
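In CUDA, the architecture version a GPU implements is exposed as its compute capability (for example, 9.0 for Hopper), and that's what you target when compiling with flags like `nvcc -arch=sm_90`. This sketch, assuming an NVIDIA GPU and `nvcc`, reads the compute capability with `cudaGetDeviceProperties` and maps it to a microarchitecture name. The `arch_name` helper is hypothetical and covers generations up to Blackwell; anything it doesn't recognize is reported as "unknown".

```cuda
// Hypothetical helper name (arch_name); assumes an NVIDIA GPU and nvcc.
#include <cassert>
#include <cstdio>
#include <string>
#include <cuda_runtime.h>

// Map a compute capability (major.minor) to its microarchitecture family name.
// Covers NVIDIA's CUDA-capable generations through Blackwell; newer parts return "unknown".
std::string arch_name(int major, int minor) {
    switch (major) {
        case 1:  return "Tesla";
        case 2:  return "Fermi";
        case 3:  return "Kepler";
        case 5:  return "Maxwell";
        case 6:  return "Pascal";
        case 7:  return minor == 5 ? "Turing" : "Volta";        // 7.0/7.2 Volta, 7.5 Turing
        case 8:  return minor == 9 ? "Ada Lovelace" : "Ampere";  // 8.9 Ada, others Ampere
        case 9:  return "Hopper";
        case 10:                                                 // data center Blackwell
        case 12: return "Blackwell";                             // GeForce RTX 50 series
        default: return "unknown";
    }
}

int main() {
    // Sanity check on a known value: compute capability 9.0 is Hopper (e.g. H100).
    assert(arch_name(9, 0) == "Hopper");

    cudaDeviceProp prop;
    if (cudaGetDeviceProperties(&prop, 0) != cudaSuccess) {
        printf("no CUDA device found\n");
        return 1;
    }
    printf("%s: compute capability %d.%d (%s)\n", prop.name, prop.major, prop.minor,
           arch_name(prop.major, prop.minor).c_str());
    return 0;
}
```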

The Evolution

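| Generation | Name | Products | Key Innovation | Succeeded by |
|---|---|---|---|---|
| 1 | Tesla (2006) | GeForce 8/9/200 | First CUDA-capable GPUs, unified shader architecture | Fermi (2010) |
| 2 | Fermi (2010) | GeForce 400/500 | First design built around compute: L1/L2 cache hierarchy, ECC memory, much faster double precision | Kepler (2012) |
| 3 | Kepler (2012) | GeForce 600/700 | Dramatically improved energy efficiency, SMX | Maxwell (2014) |
| 4 | Maxwell (2014) | GeForce 750 Ti, GeForce 900 | Redesigned SM (SMM), better perf-per-watt | Pascal (2016) |
| 5+ | Pascal, Volta, Turing, Ampere, Ada Lovelace, Hopper, Blackwell | GeForce 10 through RTX 50; data center V100, A100, H100, B200 | Tensor Cores (Volta), RT cores (Turing), Transformer Engine (Hopper) | |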

Each generation isn't just "more cores." It's a fundamental redesign of how the SM works, how memory is shared, and how energy is consumed. When people talk about GPU performance leaps, they're talking about microarchitectural improvements.

What's Next

This is just week one. I'm going deeper next on memory hierarchies (global, shared, local, constant, texture memory), warp execution (how the SM actually schedules 32 threads at a time), and the nuts and bolts of writing your first CUDA kernel.

As an SRE, what I find most fascinating is that the GPU is essentially a bus for math: a massive throughput machine optimized for moving numbers through arithmetic pipelines as fast as possible. The entire architecture, from the thread hierarchy to the streaming multiprocessors, is designed around one principle: do the same thing to a lot of data, all at once.

If you're managing infrastructure that touches ML training, video processing, scientific computing, or any throughput-heavy workload, understanding how the GPU works under the hood will make you a better engineer.

Go be 10x!
